The Institute for Work & Health (IWH) is known globally for its in-house expertise in synthesizing the best available evidence on what works to protect and improve the safety and health of working people. We are especially known for our expertise in conducting systematic reviews. IWH began conducting systematic reviews and other types of knowledge synthesis in 1994. This activity expanded considerably in 1996 when we began housing Cochrane Back and Neck, one of over 50 international systematic review groups in Cochrane. Then in 2005, IWH created its Systematic Review Program—a program of research dedicated to conducting systematic reviews that answer important questions relevant to stakeholders in workplace health and safety.

Where systematic reviews fit into our concept of OHS evidence

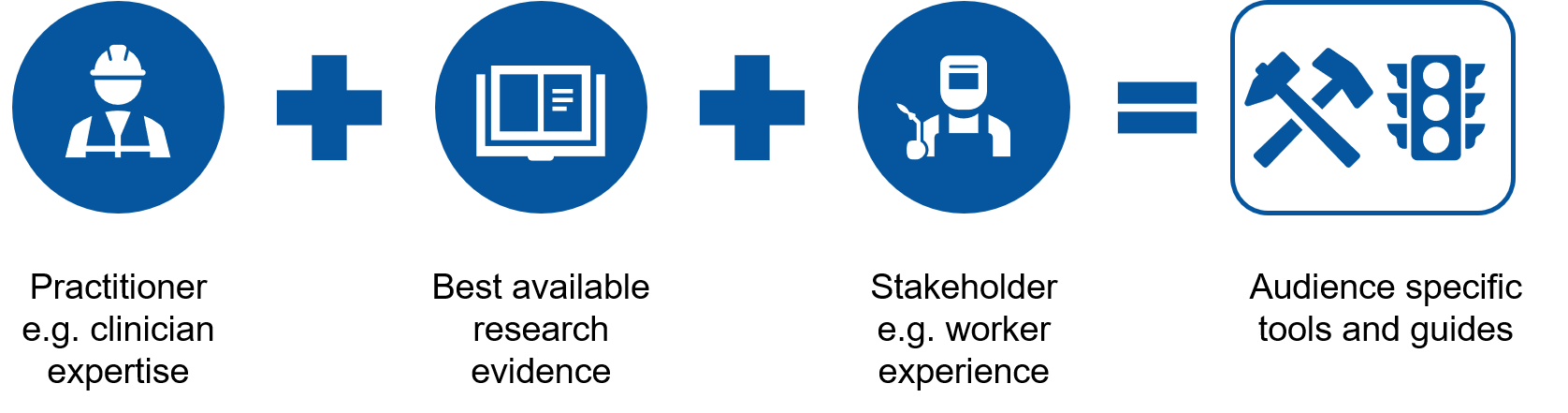

At the Institute for Work & Health (IWH), we contribute to an evidence-based approach to informed decision-making in occupational health and safety (OHS). We consider three sources when it comes to building OHS evidence:

- the best available research evidence;

- practitioner (e.g. clinician, workplace) expertise; and

- stakeholder (e.g. worker) experiences, including their values, expectations and preferences.

We then use these sources to develop key messages targeted to relevant stakeholders. And, often, we use these sources to develop evidence-based practical tools or guides.

Systematic reviews fall into the first category—best available research evidence. More specifically, the results of systematic reviews, combined with direct input from practitioners about their expertise and from stakeholders about their experiences (the latter two collected via surveys, interviews and focus groups), all contribute to building a practical evidence base for workplaces.

Our six-step approach to OHS systematic reviews

A systematic review is a type of research study. It aims to find an answer to a specific research question by summarizing the results across existing scientific studies. This provides evidence that is stronger and more certain than the results of individual studies.

When conducting systematic reviews, our aim at the Institute is to conduct a review of the literature that is comprehensive, systematic, rigorous and transparent. We use a process modelled on, and adapted from, the process used by Cochrane. It includes six steps, which are described below.

One of the innovative aspects of our systematic review process is our engagement of knowledge users and collaborators. They are involved throughout the review, beginning with the refinement of the research question(s) through to the extraction and communication of the messages (see figure below).

Step 1: Develop the question

To initiate a review, it is important to develop a well-informed, well-constructed research question, ideally following the PICO (Population, Intervention, Comparison and Outcome) framework. In other words, the question clearly indicates which population is being studied, what is being done to them, compared with what, and what the outcome of interest is. For example, the following elements define a systematic review question that follows the PICO framework:

Population = persons with low-back pain

Intervention = alternative therapies

Comparison = usual care

Outcome = improved health

Based on this, the research question becomes: Do alternative therapies, compared with usual care, improve the health of persons with low-back pain?
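For teams that track review questions in software, the PICO elements above can be held in a small record so that the same four fields later drive the search and screening steps. The following is only an illustrative sketch: the class name `PicoQuestion`, its field names and the question template are assumptions, not part of the Institute's process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical container for the four PICO elements of a review question."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def as_question(self) -> str:
        # One possible wording template; real questions are refined with stakeholders.
        return (
            f"Among {self.population}, do {self.intervention}, "
            f"compared with {self.comparison}, lead to {self.outcome}?"
        )


back_pain = PicoQuestion(
    population="persons with low-back pain",
    intervention="alternative therapies",
    comparison="usual care",
    outcome="improved health",
)
print(back_pain.as_question())
```

Keeping the comparison as its own field makes it harder to drop it from the final wording of the question.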

Step 2: Conduct a literature search

To find sources of evidence, we work with an information specialist to design a comprehensive search strategy that is based on the PICO parameters and is agreed upon by the project team.

Step 3: Identify relevant articles

Next, we filter the studies retrieved in the search through the use of predefined inclusion/exclusion criteria, eliminating those studies that are not deemed directly relevant to the question being asked.
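As a rough illustration of what screening against predefined criteria can look like, the sketch below checks each retrieved record against a fixed list of named criteria and reports which ones it fails. The `Record` fields and the three criteria are invented for this example; an actual review defines its own criteria before screening begins.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Record:
    """Hypothetical summary of one study retrieved by the search."""
    title: str
    population: str
    design: str
    peer_reviewed: bool


# Example criteria only: each pairs a readable name with a pass/fail check.
CRITERIA: list[tuple[str, Callable[[Record], bool]]] = [
    ("population is persons with low-back pain",
     lambda r: r.population == "low-back pain"),
    ("study includes a comparison group",
     lambda r: r.design in {"randomized trial", "cohort with comparison"}),
    ("published in a peer-reviewed source",
     lambda r: r.peer_reviewed),
]


def screen(record: Record) -> list[str]:
    """Return the names of the criteria a record fails (empty list = include)."""
    return [name for name, passes in CRITERIA if not passes(record)]


kept = Record("Trial A", "low-back pain", "randomized trial", True)
dropped = Record("Report B", "neck pain", "case report", True)
print(screen(kept))
print(screen(dropped))
```

Recording the reason for each exclusion, rather than a bare yes or no, supports the transparency that a systematic review aims for.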

Step 4: Appraise the quality of articles

Relevant studies are then appraised for their methodological rigour (i.e. were they conducted properly), using predefined criteria.

Step 5: Extract the data

The research team then summarizes the key contents of each study retained after the previous steps, focusing on elements that will help answer the research question.

Step 6: Synthesize the evidence

The relevant evidence is then synthesized, which involves assessing the quality, quantity and consistency of the evidence, based on the “best evidence synthesis” approach developed by Robert Slavin (https://doi.org/10.3102/0013189X015009005). Working with stakeholders, we operationalize this approach by ranking the level of evidence as strong, moderate, limited, mixed or insufficient, according to the criteria outlined in the chart below.

As the chart shows, when the level of evidence is strong, we feel we can provide practice recommendations. When the level of evidence is moderate, we provide practice considerations. When the level of evidence is lower than moderate, we feel we cannot provide directions for practice based on the scientific literature at that time.

| Level of Evidence | Minimum Quality* | Minimum Quantity | Consistency | Strength of Message |
|---|---|---|---|---|
| Strong | High (H) | 3 | 3H agree; if 3+ studies, ≥ 3/4 of the M & H agree | Recommendations |
| Moderate | Medium (M) | 2H or 2M & 1H | 2H agree or 2M & 1H agree; if 3+, ≥ 2/3 of the M & H agree | Practice considerations |
| Limited | Medium (M) | 1H or 2M or 1M & 1H | 2 (M and/or H) agree; if 2+, >1/2 of the M & H agree | Not enough evidence to make recommendations or practice considerations |
| Mixed | Medium (M) | 2 | Findings are contradictory | |
| Insufficient | Medium quality studies that do not meet the above criteria | | | |

*High = score >85% in quality assessment; Medium = score of 50-85% in quality assessment
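To make the chart's logic concrete, here is a minimal sketch of how the ranking could be expressed as a decision rule. It is one reading of the chart, not the Institute's own tool. Several points are assumptions made for this example: each study is reduced to a quality score and a single finding label, the most common finding among medium- and high-quality studies is taken as the finding being tested for agreement, a single high-quality study is treated as limited evidence, and the label "L" for scores below 50% is invented here.

```python
from collections import Counter


def quality_rating(score_percent: float) -> str:
    """Map a quality-assessment score to a rating using the chart's footnote."""
    if score_percent > 85:
        return "H"
    if score_percent >= 50:
        return "M"
    return "L"  # assumed label; studies below 50% are not counted


def evidence_level(studies: list[tuple[float, str]]) -> str:
    """Rank evidence from (quality score in %, finding) pairs."""
    rated = [(quality_rating(score), finding) for score, finding in studies]
    counted = [(q, f) for q, f in rated if q in ("H", "M")]
    if not counted:
        return "insufficient"

    # Assumption: agreement is measured against the most common finding.
    top_finding, top_n = Counter(f for _, f in counted).most_common(1)[0]
    total = len(counted)
    h_agree = sum(1 for q, f in counted if q == "H" and f == top_finding)
    m_agree = top_n - h_agree

    # Integer comparisons avoid rounding issues with 3/4, 2/3 and 1/2.
    if h_agree >= 3 and 4 * top_n >= 3 * total:
        return "strong"
    if (h_agree >= 2 or (h_agree >= 1 and m_agree >= 2)) and 3 * top_n >= 2 * total:
        return "moderate"
    if total == 1 and h_agree == 1:
        return "limited"  # assumed reading of "1H" in the quantity column
    if top_n >= 2 and 2 * top_n > total:
        return "limited"
    if total >= 2:
        return "mixed"
    return "insufficient"


print(evidence_level([(90, "positive"), (92, "positive"), (88, "positive")]))
print(evidence_level([(60, "positive"), (70, "negative")]))
```

In this sketch, three high-quality studies that agree are ranked as strong, while two medium-quality studies with contradictory findings are ranked as mixed. In practice the ranking is made by the review team working with stakeholders, and a rule like this can only approximate that judgment.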

How we contextualize OHS evidence

We contextualize evidence from systematic reviews for our stakeholders in two ways: by ensuring stakeholder input into the research question, and by shaping the messaging from our reviews so that the evidence may be applicable to them.